Task 5: Automatic speech recognition

Task definition

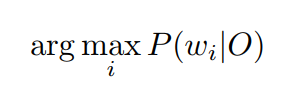

This task deals with automatically transcribing audio recordings of speech made in a noisy environment. Automatic speech recognition is commonly defined as the process of determining the most likely sequence of words given a sequence of observations derived from the audio. The process is commonly described by the formula:

$$\hat{W} = \arg\max_{W} P(W \mid O)$$

where $O = (o_1, \ldots, o_T)$ is a sequence of acoustic observation vectors derived using signal processing techniques (common choices include Mel-frequency filterbank features and Mel-Frequency Cepstral Coefficients, MFCC [11]), and $W = (w_1, \ldots, w_n)$ is a candidate sequence of words. The length T of O and the number n of output words (indexed by i) can differ, and usually differ considerably, although it is safe to assume that T is larger than n. Other common terms for the process are "voice recognition", "speech-to-text", or simply "automatic transcription".
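Because P(O) does not depend on W, Bayes' rule turns the maximization into $\arg\max_W P(O \mid W)\,P(W)$: an acoustic model score combined with a language model score. The Python sketch below picks the best entry of a small N-best list in the log domain. The hypotheses and their scores are invented for illustration, and the `lm_weight` parameter is a hypothetical name for the language-model scale factor that decoders commonly expose; a real decoder searches a vastly larger hypothesis space than a two-item list.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    words: list[str]
    acoustic_logprob: float  # log P(O | W), invented value standing in for an acoustic model
    lm_logprob: float        # log P(W), invented value standing in for a language model


def best_hypothesis(hyps, lm_weight=1.0):
    """Return the hypothesis maximizing log P(O|W) + lm_weight * log P(W).

    With lm_weight = 1.0 this is argmax_W P(O|W) P(W), which equals
    argmax_W P(W|O) because P(O) is the same for every W.
    """
    return max(hyps, key=lambda h: h.acoustic_logprob + lm_weight * h.lm_logprob)


# Hypothetical two-item N-best list for one utterance
nbest = [
    Hypothesis(["posiedzenie", "sejmu"], acoustic_logprob=-120.0, lm_logprob=-8.0),
    Hypothesis(["posiedzenie", "sejm", "u"], acoustic_logprob=-118.5, lm_logprob=-15.0),
]
print(" ".join(best_hypothesis(nbest).words))  # posiedzenie sejmu
```

Setting `lm_weight=0.0` ignores the language model and selects the acoustically slightly better but ill-formed "posiedzenie sejm u", which shows why both terms of the product matter.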

While it is possible (and in some cases useful) to derive other information from the recording (e.g. identifying speakers, determining the phonetic content of the words, or recognizing other speech and non-speech events), this task evaluates only the sequence of words. No other information will be evaluated.

The described problem is usually solved with a Large-Vocabulary Continuous Speech Recognition (LVCSR) system capable of transcribing speech in a predetermined domain. Common techniques for building such systems include:

- Hidden Markov Models [10]

- Weighted Finite State Transducers [7]

- Artificial Neural Networks [4]

- Recurrent Neural Networks [1]

- Deep Neural Networks [2]

- End-to-end models [3]
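As an illustration of the first technique on the list, HMM-based recognizers [10] decode by searching for the single most likely hidden-state path with the Viterbi algorithm. The Python sketch below runs Viterbi on a toy two-state discrete HMM in the log domain. The state names (`sil`, `sp`), the observation symbols, and all probabilities are made up; real systems use continuous emission densities over feature vectors and thousands of states, often compiled into a WFST search graph [7].

```python
import math


def viterbi(observations, states, log_start, log_trans, log_emit):
    """Return the most likely state path of a discrete HMM and its log-probability."""
    # best[t][s]: log-probability of the best path that ends in state s at time t
    best = [{s: log_start[s] + log_emit[s][observations[0]] for s in states}]
    # back[t][s]: predecessor of state s on that best path
    back = [{}]
    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            prev, score = max(
                ((p, best[t - 1][p] + log_trans[p][s]) for p in states),
                key=lambda item: item[1],
            )
            best[t][s] = score + log_emit[s][observations[t]]
            back[t][s] = prev
    # Start from the best final state and follow the back-pointers
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return path, best[-1][last]


def to_log(table):
    """Convert a (possibly nested) dict of probabilities to log-probabilities."""
    return {k: to_log(v) if isinstance(v, dict) else math.log(v) for k, v in table.items()}


# Toy model: made-up "silence" and "speech" states emitting "quiet"/"loud" symbols
states = ["sil", "sp"]
log_start = to_log({"sil": 0.8, "sp": 0.2})
log_trans = to_log({"sil": {"sil": 0.7, "sp": 0.3}, "sp": {"sil": 0.2, "sp": 0.8}})
log_emit = to_log({"sil": {"quiet": 0.9, "loud": 0.1}, "sp": {"quiet": 0.2, "loud": 0.8}})

path, log_prob = viterbi(["quiet", "loud", "loud", "quiet"], states, log_start, log_trans, log_emit)
print(path)  # ['sil', 'sp', 'sp', 'sil']
```

Working in log-probabilities keeps long utterances from underflowing, since the product of thousands of small probabilities would otherwise round to zero.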

Examples of freely available, open-source toolkits include:

- HTK - http://htk.eng.cam.ac.uk/

- CMU Sphinx - https://cmusphinx.github.io/

- Kaldi - http://kaldi-asr.org/

- RASR - https://www-i6.informatik.rwth-aachen.de/rwth-asr/

- Mozilla DeepSpeech - https://github.com/mozilla/DeepSpeech

Many of these websites contain tutorials and documentation that explain the field in much more detail. Participants are encouraged to explore other, lesser-known solutions, especially ones built on popular ML frameworks, or to submit results obtained with their own systems.

Training data

There are two types of subtasks available:

Fixed competition

Participants are allowed to use only the following corpora:

- Clarin-PL speech corpus [5] - https://mowa.clarin-pl.eu/korpusy

- PELCRA parliamentary corpus [9] - https://tinyurl.com/PELCRA-PARL

- A collection of 97 hours of parliamentary speeches published on the Clarin-PL website [6] - http://mowa.clarin-pl.eu/korpusy/parlament/parlament.tar.gz

- Polish Sejm Corpus for language modeling [8] - http://clip.ipipan.waw.pl/PSC

This limitation ensures a level playing field, especially for participants who do not have access to commercial or otherwise private data.

Open competition

You are allowed to use any data you can find, provided that you can disclose its source, at least in a descriptive manner (e.g. type of conversation, quality of recording, etc.).

You are not allowed to use any data published on the https://sejm.gov.pl and https://senat.gov.pl websites after 1.1.2019!

You are not allowed to use other systems and services (e.g. cloud services and closed-source systems that you did not develop yourself) during the evaluation phase. You may, however, use them during the training phase.

Test data

The test data is available for download.

Evaluation procedure

The evaluation will be carried out using random parliamentary recordings published after 1.1.2019. Participants will be provided with a set of audio files (sampled at 16 Hz, 16-bit, linear encoding) and asked to send their automatic

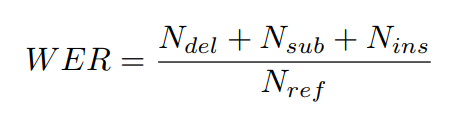

transcripts, one text file per each audio file, in UTF-8 encoding containing, normalized text, lowercase, with no punctuation. The evaluation will be carried out using the NIST SCLITE package to determine the Word Error Rate (WER) of the individual submissions. Word error rate is defined as the number of edit-distance errors (deletions, substitutions, insertions) divided by the length of the reference:
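To sanity-check transcripts before submission, a participant might reproduce the normalization and the WER computation locally. The Python sketch below assumes a simple normalization (Unicode-aware lowercasing and replacing every non-word character with a space), which is an assumption rather than the official rule set, and computes the word-level Levenshtein distance by dynamic programming. The function names are hypothetical, and the SCLITE scores remain the authoritative ones.

```python
import re


def normalize(text):
    """Lowercase, replace punctuation with spaces, and split into words (assumed rules)."""
    # In a str pattern, \w is Unicode-aware, so Polish letters such as ą, ę, ł, ż are kept
    return re.sub(r"[^\w\s]", " ", text.lower()).split()


def word_errors(ref, hyp):
    """Minimum number of substitutions, deletions and insertions turning ref into hyp."""
    # At the start of iteration i, prev[j] is the edit distance between ref[:i-1] and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            substitution = prev[j - 1] + (ref[i - 1] != hyp[j - 1])  # costs 0 if the words match
            deletion = prev[j] + 1        # reference word missing from the hypothesis
            insertion = curr[j - 1] + 1   # extra word in the hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1]


def wer(reference, hypothesis):
    """Word error rate of a single hypothesis against its reference."""
    ref, hyp = normalize(reference), normalize(hypothesis)
    if not ref:
        raise ValueError("reference must contain at least one word")
    return word_errors(ref, hyp) / len(ref)


# One substitution (izbo -> izba) and one deletion ("o") over 5 reference words
print(wer("Wysoka Izbo, proszę o ciszę.", "wysoka izba proszę ciszę"))  # 0.4
```

When scoring many files, summing the errors and the reference lengths over all files before dividing gives a corpus-level WER, which in general differs from the average of per-file WERs.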

References

[1] Alex Graves. Supervised sequence labelling with recurrent neural networks. Springer, 2012. ISBN 9783642212703.

[2] Alex Graves, Abdel-rahman Mohamed, and Geoffrey Hinton. Speech recognition with deep recurrent neural networks. In 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 6645–6649. IEEE, 2013.

[3] Awni Hannun, Carl Case, Jared Casper, Bryan Catanzaro, Greg Diamos, Erich Elsen, Ryan Prenger, Sanjeev Satheesh, Shubho Sengupta, Adam Coates, et al. Deep Speech: Scaling up end-to-end speech recognition. arXiv preprint arXiv:1412.5567, 2014.

[4] Mike M Hochberg, Steve J Renals, Anthony J Robinson, and Dan J Kershaw. Large vocabulary continuous speech recognition using a hybrid connectionist-HMM system. In Third International Conference on Spoken Language Processing, 1994.

[5] Danijel Koržinek, Krzysztof Marasek, Lukasz Brocki, and Krzysztof Wołk. Polish read speech corpus for speech tools and services. arXiv preprint arXiv:1706.00245, 2017.

[6] Krzysztof Marasek, Danijel Koržinek, and Lukasz Brocki. System for automatic transcription of sessions of the Polish Senate. Archives of Acoustics, 39(4):501–509, 2014.

[7] Mehryar Mohri, Fernando Pereira, and Michael Riley. Weighted finite-state transducers in speech recognition. Computer Speech & Language, 16(1):69–88, 2002.

[8] Maciej Ogrodniczuk. The Polish Sejm Corpus. In LREC, pages 2219–2223, 2012.

[9] Piotr Pęzik. Increasing the accessibility of time-aligned speech corpora with Spokes Mix. In LREC, 2018.

[10] Lawrence R Rabiner. A tutorial on hidden Markov models and selected applications in speech recognition. Proceedings of the IEEE, 77(2):257–286, 1989.

[11] Steve Young, Gunnar Evermann, Mark Gales, Thomas Hain, Dan Kershaw, Xunying Liu, Gareth Moore, Julian Odell, Dave Ollason, Dan Povey, et al. The HTK Book. Cambridge University Engineering Department, 3:175, 2002.