Task 1: Recognition and normalization of temporal expressions

Task definition

The aim of this task is to advance research on the processing of temporal expressions, which are used in other NLP applications such as question answering, summarisation, textual entailment and document classification. This task follows on from previous TempEval events organised for evaluating time expressions for English and Spanish (SemEval-2013). This time we provide a corpus of Polish documents fully annotated with temporal expressions. The annotation consists of the boundaries, classes and normalised values of temporal expressions. The original TIMEX3 annotation guidelines are available here. The annotation for Polish texts is based on a modified version of the guidelines at the level of annotating boundaries/types (here) and local/global normalisation (here).

Training data

The training dataset contains 1500 documents from the KPWr corpus. Each document is an XML file with the given annotations. Example:

<DOCID>344245.xml</DOCID>

<DCT><TIMEX3 tid="t0" functionInDocument="CREATION_TIME" type="DATE" value="2006-12-16"></TIMEX3></DCT>

<TEXT>

<TIMEX3 tid="t1" type="DATE" value="2006-12-16">Dziś</TIMEX3> Creative Commons obchodzi czwarte urodziny - przedsięwzięcie ruszyło dokładnie <TIMEX3 tid="t2" type="DATE" value="2002-12-16">16 grudnia 2002</TIMEX3> w San Francisco. (...) Z kolei w <TIMEX3 tid="t4" type="DATE" value="2006-12-18">poniedziałek</TIMEX3> ogłoszone zostaną wyniki głosowanie na najlepsze blogi. W ciągu <TIMEX3 tid="t5" type="DURATION" value="P8D">8 dni</TIMEX3> internauci oddali ponad pół miliona głosów. Z najnowszego raportu Gartnera wynika, że w <TIMEX3 tid="t6" type="DATE" value="2007">przyszłym roku</TIMEX3> blogosfera rozrośnie się do rekordowego rozmiaru 100 milionów blogów. (...)

</TEXT>

Training data is available here.
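
As a rough illustration of how such a document could be read, the sketch below lists the TIMEX3 annotations with Python's standard xml.etree.ElementTree module. It assumes that each file is well-formed XML with a single root element (called TimeML here, as in TempEval-3 files; the excerpt above does not show a root). The helper names extract_timexes and creation_time are hypothetical.

```python
import xml.etree.ElementTree as ET

# Shortened sample; the <TimeML> root is an assumption, not shown in the excerpt.
SAMPLE = """<TimeML>
<DOCID>344245.xml</DOCID>
<DCT><TIMEX3 tid="t0" functionInDocument="CREATION_TIME" type="DATE" value="2006-12-16"></TIMEX3></DCT>
<TEXT>
<TIMEX3 tid="t1" type="DATE" value="2006-12-16">Dziś</TIMEX3> Creative Commons obchodzi czwarte urodziny. W ciągu <TIMEX3 tid="t5" type="DURATION" value="P8D">8 dni</TIMEX3> internauci oddali ponad pół miliona głosów.
</TEXT>
</TimeML>"""


def extract_timexes(xml_string):
    """Return (tid, type, value, text) for every TIMEX3 inside <TEXT>."""
    root = ET.fromstring(xml_string)
    text_element = root.find("TEXT")
    return [
        (t.get("tid"), t.get("type"), t.get("value"), "".join(t.itertext()))
        for t in text_element.iter("TIMEX3")
    ]


def creation_time(xml_string):
    """Return the value of the document creation time (DCT) annotation."""
    root = ET.fromstring(xml_string)
    return root.find("DCT/TIMEX3").get("value")


for timex in extract_timexes(SAMPLE):
    print(timex)
print("DCT:", creation_time(SAMPLE))
```

The creation time is read separately because relative expressions such as "Dziś" are normalised against it.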

Test data

The test dataset will contain about 100-200 documents. Each document follows the same TimeML format as the training data, but only the <TIMEX3 tid="t0" functionInDocument="CREATION_TIME"/> annotation is given. Example:

<DOCID>344245.xml</DOCID>

<DCT><TIMEX3 tid="t0" functionInDocument="CREATION_TIME" type="DATE" value="2006-12-16"></TIMEX3></DCT>

<TEXT>

Dziś Creative Commons obchodzi czwarte urodziny - przedsięwzięcie ruszyło dokładnie 16 grudnia 2002 w San Francisco. (...) Z kolei w poniedziałek ogłoszone zostaną wyniki głosowanie na najlepsze blogi. W ciągu 8 dni internauci oddali ponad pół miliona głosów. Z najnowszego raportu Gartnera wynika, że w przyszłym roku blogosfera rozrośnie się do rekordowego rozmiaru 100 milionów blogów. (...)

</TEXT>

Test data is available for download here.
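
Assuming that system output is expected in the same TIMEX3 format as the training data, the sketch below shows one possible way to write recognised expressions back into the plain test text. The function name insert_timex_tags, the (start, end, type, value) tuple layout and the sequential tid numbering are assumptions made for illustration only.

```python
from xml.sax.saxutils import escape, quoteattr


def insert_timex_tags(text, annotations):
    """Wrap character spans of `text` in TIMEX3 tags.

    `annotations` is a list of (start, end, type, value) tuples with
    non-overlapping spans; tids are assigned as t1, t2, ... in text order.
    """
    pieces = []
    cursor = 0
    for number, (start, end, timex_type, value) in enumerate(sorted(annotations), start=1):
        if start < cursor:
            raise ValueError("overlapping annotations are not supported")
        # Copy the untouched text before the span, escaping XML specials.
        pieces.append(escape(text[cursor:start]))
        pieces.append(
            "<TIMEX3 tid={} type={} value={}>{}</TIMEX3>".format(
                quoteattr("t%d" % number),
                quoteattr(timex_type),
                quoteattr(value),
                escape(text[start:end]),
            )
        )
        cursor = end
    pieces.append(escape(text[cursor:]))
    return "".join(pieces)


text = "W ciągu 8 dni internauci oddali ponad pół miliona głosów."
start = text.index("8 dni")
print(insert_timex_tags(text, [(start, start + len("8 dni"), "DURATION", "P8D")]))
```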

Evaluation metrics

We utilise the same evaluation procedure as described in article [1] (section 4.1: Temporal Entity Extraction). We need to evaluate:

- How many entities are correctly identified,

- If the extents for the entities are correctly identified,

- How many entity attributes are correctly identified.

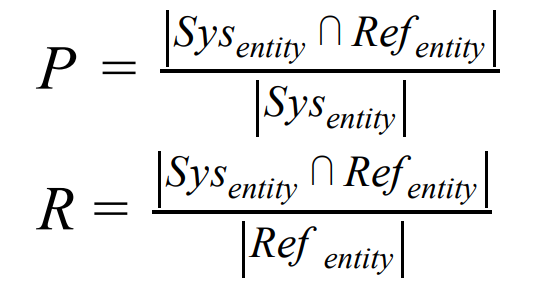

We use classical precision and recall for the recognition.

How many entities are correctly identified

We evaluate our entities using the entity-based evaluation with the equations below.

where, Sys_entity contains the entities extracted by the system that we want to evaluate, and Ref_entity contains the entities from the reference annotation that are being compared.
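
For readers who cannot view the equation image, the entity-level precision and recall defined in [1] can be transcribed as follows. This is written from the TempEval-3 definitions, so the notation may differ slightly from the image; F1 is the usual harmonic mean of the two.

```latex
\[
\text{Precision} = \frac{|\mathit{Sys}_{entity} \cap \mathit{Ref}_{entity}|}{|\mathit{Sys}_{entity}|},
\qquad
\text{Recall} = \frac{|\mathit{Sys}_{entity} \cap \mathit{Ref}_{entity}|}{|\mathit{Ref}_{entity}|},
\qquad
F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
\]
```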

If the extents for the entities are correctly identified:

We compare our entities using both strict match and relaxed match. When there is an exact match between the system entity and the gold entity, we call it a strict match, e.g. “16 grudnia 2002” vs “16 grudnia 2002”. When there is an overlap between the system entity and the gold entity, we call it a relaxed match, e.g. “16 grudnia 2002” vs “2002”. When there is a relaxed match, we compare the attribute values.
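
The two matching modes can be sketched on character offsets as shown below. This is only an illustration of the definitions; the evaluation toolkit may compare extents differently (for example on tokens), and the function names are hypothetical.

```python
def strict_match(system_span, gold_span):
    """Spans are (start, end) character offsets, end exclusive."""
    return system_span == gold_span


def relaxed_match(system_span, gold_span):
    """True when the two spans share at least one character."""
    return system_span[0] < gold_span[1] and gold_span[0] < system_span[1]


text = "przedsięwzięcie ruszyło dokładnie 16 grudnia 2002 w San Francisco"
gold_start = text.index("16 grudnia 2002")
gold = (gold_start, gold_start + len("16 grudnia 2002"))
year_start = text.index("2002")
system = (year_start, year_start + len("2002"))

print(strict_match(gold, gold))      # identical extents
print(relaxed_match(system, gold))   # "2002" overlaps "16 grudnia 2002"
print(strict_match(system, gold))    # extents differ
```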

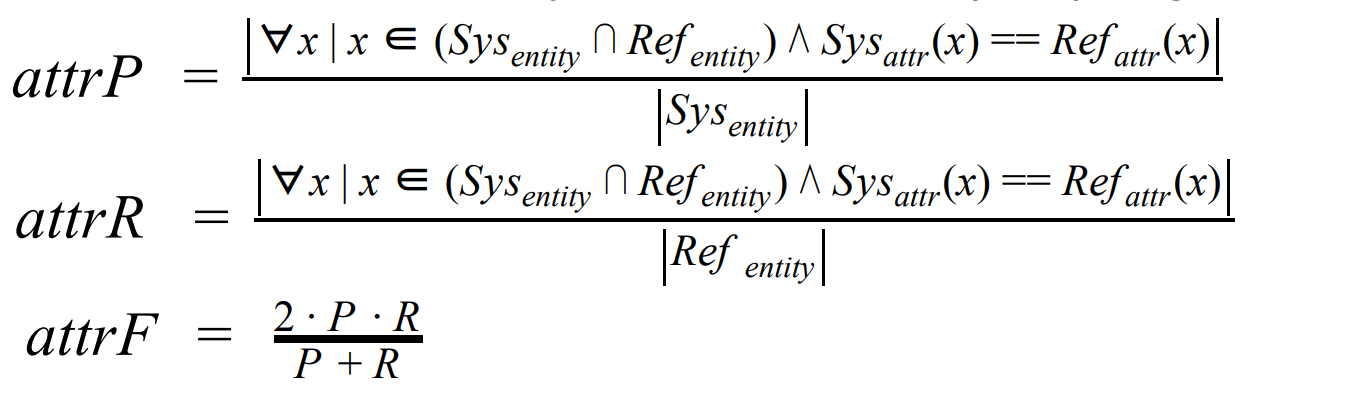

How many entity attributes are correctly identified:

We evaluate our entity attributes using the attribute F1-score, which captures how well the system identified both the entity and attribute (attr) together.

We measure P, R and F1 for both strict and relaxed matching, and relaxed F1 for the value and type attributes. The most important metric is the relaxed F1 for the value attribute.
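
A minimal sketch of the attribute score under relaxed matching follows. It is based on the definition in [1], where an attribute counts as correct only if the entity is matched and the attribute values agree. The dictionary layout, the greedy one-to-one pairing and the function name attribute_scores are assumptions of this sketch, not the toolkit's implementation.

```python
def attribute_scores(system, gold, attr):
    """Return (precision, recall, F1) for one attribute under relaxed matching.

    `system` and `gold` are lists of dicts with keys "span" (start, end),
    "type" and "value"; this data layout is an assumption of the sketch.
    """
    def overlaps(a, b):
        return a[0] < b[1] and b[0] < a[1]

    correct = 0
    unused_gold = list(gold)
    for entity in system:
        for candidate in unused_gold:
            if overlaps(entity["span"], candidate["span"]):
                # Each gold entity is paired with at most one system entity.
                unused_gold.remove(candidate)
                if entity[attr] == candidate[attr]:
                    correct += 1
                break
    precision = correct / len(system) if system else 0.0
    recall = correct / len(gold) if gold else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


gold = [
    {"span": (0, 4), "type": "DATE", "value": "2006-12-16"},
    {"span": (34, 49), "type": "DATE", "value": "2002-12-16"},
]
system = [
    {"span": (0, 4), "type": "DATE", "value": "2006-12-16"},
    {"span": (45, 49), "type": "DATE", "value": "2002"},  # overlap, wrong value
]
print(attribute_scores(system, gold, "value"))  # (0.5, 0.5, 0.5)
print(attribute_scores(system, gold, "type"))   # (1.0, 1.0, 1.0)
```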

Evaluation script

We use the evaluation script provided by Naushad UzZaman on GitHub:

git clone https://github.com/naushadzaman/tempeval3_toolkit.git

SemEval2013 example usage

cd tempeval3_toolkit

python TE3-evaluation.py data/gold data/system

> === Timex Performance ===

> Strict Match F1 P R

> 87.5 84.85 90.32

> Relaxed Match F1 P R

> 93.75 90.91 96.77

> Attribute F1 Value Type

> 50.0 93.75
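
As a quick sanity check on the sample output above, F1 is the harmonic mean of P and R, so recomputing it from the rounded P and R values should reproduce the reported F1 up to rounding. The helper name f1_score is hypothetical.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both given in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(round(f1_score(84.85, 90.32), 2))  # strict match row
print(round(f1_score(90.91, 96.77), 2))  # relaxed match row
```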

PolEval2019 example usage

cd tempeval3_toolkit

wget http://tools.clarin-pl.eu/share/PolEval2019_TIMEX3.zip

7za x PolEval2019_TIMEX3.zip

python TE3-evaluation.py PolEval2019_TIMEX3/example/test PolEval2019_TIMEX3/example/test-gold/

> === Timex Performance ===

> Strict Match F1 P R

> 0.0 0.0 0.0

> Relaxed Match F1 P R

> 0.0 0.0 0.0

> Attribute F1 Value Type

> 0.0 0.0

References

[1] UzZaman, N., Llorens, H., Derczynski, L., Allen, J., Verhagen, M., & Pustejovsky, J. (2013). SemEval-2013 Task 1: TempEval-3: Evaluating time expressions, events, and temporal relations. In Second Joint Conference on Lexical and Computational Semantics (*SEM), Volume 2: Proceedings of the Seventh International Workshop on Semantic Evaluation (SemEval 2013) (Vol. 2, pp. 1-9).